By John Booth, Managing Director of Carbon3IT Ltd, and Haiyan Wu, UKERC Operations Manager.

Data centres are a complex system of systems containing everything from enterprise servers to cooling equipment. They were originally designed and built to principles first created in the 1950s; the world has since moved on.

They are heavy energy users. Some estimates put their energy use at 1% of the world’s total energy consumption but, as with all statistics, this figure should be treated with considerable caution. There has been a lot of angst within the environmental community that data centre energy use appears to be spiralling out of control, and there have been calls for the industry to do better.

The EU recently stated in the EU Green Deal that “Data Centres, can and should be Carbon Neutral by 2030” and raised the prospect of forcing this through via regulatory change. As the UK has left the EU, we will not be required to comply with any EU regulations, but should the UK government adopt a similar approach?

Unfortunately for policymakers, the data available generally relates to commercial data centre operators that provide data centre power, space, and cooling for a multitude of customers. This is commonly known as “colocation” space, or simply “colo”. A company will rent space and adopt a common power and cooling strategy that is decided by the operator and supported by “their” Service Level Agreements, and the set points in these agreements are set artificially low.

Historically, data centre “set points” have been 18-21°C for temperature and 50% RH ±5% for humidity, giving a range of 45-55% RH, but there has been significant work within the industry to widen these set points in line with the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommendations of 18-27°C and 8-80% RH.

These parameters are designed to ensure that the equipment remains covered under warranty, but if you look at a server datasheet you will see that the actual environmental ranges are quite a bit wider, typically in the 10-35°C range.

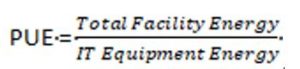

The industry uses a metric called Power Usage Effectiveness, or PUE. This is the ratio of total facility energy to the energy used to power the ICT systems, and is expressed as a number.

Most Data Centres in the UK are operating at a PUE of between 1.5 and 1.8; the EU average is 1.8, and in some cases it is even higher. At a PUE of 1.8, for every 1 W supplied to the ICT equipment, 0.8 W is supplied to the supporting infrastructure. In Data Centres operating above 2, more energy is in effect being supplied to the supporting infrastructure than to the ICT equipment. This may mean that more energy is being used to “cool” the infrastructure than is strictly necessary.
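To make the ratio concrete, here is a minimal Python sketch of the PUE calculation and the infrastructure overhead it implies. The function names are illustrative only and do not come from any standard tool.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Return PUE: total facility energy divided by IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts going to supporting infrastructure for each watt of IT load."""
    return pue_value - 1.0


# The PUE values discussed in the text.
for value in (1.5, 1.8, 2.0):
    print(f"PUE {value}: {overhead_per_it_watt(value):.1f} W overhead per 1 W of IT")
```

A PUE of exactly 2 means the overhead equals the IT load; anything above that means the supporting infrastructure uses more than the IT it supports.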

The average temperature in the UK ranges between 6°C and 14°C, with some temperature spikes in the summer. So for most of the year there is no need for mechanical cooling at all. Many new data centres are being built with indirect fresh air free cooling, which brings down their PUE by reducing the need for compressors and other refrigeration equipment.

Data Centres are in effect anything from a small room in an office block to hyperscale or cloud facilities with literally hundreds of thousands of servers. These huge facilities are being built at an astonishing pace: some 450 exist globally, with at least another 150 in construction, and the projection is for growth to be around 25% for most of the next decade. There could be between 5 and 10 colocation facilities in every major city throughout the EU, perhaps not so many in the UK, where enterprise data centres (those that are owned and operated by the organisation) are the largest component. A 2017 EU study indicated some 10,500 such facilities in the UK.

The data referred to above is based upon information published by techUK in their “Climate Change Agreement (CCA) for Data Centres” report. It covers 129 facilities from 57 target units, representing 42 companies. However, we must assume that there are some colocation data centres that are not in the CCA, perhaps because they only have one facility or because the administration requirements outweigh any financial benefits they may receive.

The total energy used for the organisations within the CCA for the 2nd target period was 2.573 TWh per year, with an increase of 0.4 TWh over the previous period, reflecting the growth of the number of participants in the scheme and their activities.

The CCA PUE in the base year was 1.95, and the PUE in the target period was 1.80, an improvement but still far above where it should be.

So the CCA data is from the commercial operators, but what about all businesses? In 2016, there were 5.5 million businesses in the UK, and it is safe to assume that not all of them have their own server room. As 5.3 million are microbusinesses employing fewer than 10 people, some of these businesses will be using cloud or indeed are so small that they operate with one computer.

There are 33,000 businesses with 50-249 employees and 7,000 with 250 or more. These 40,000 businesses would require some sort of central computer room, server room, or data centre. The configurations of these facilities will depend on the business and the criticality of the data they contain. Alongside this, let’s add another 40,000 for non-business IT, including the Government, local authorities, emergency services, universities, schools, and other educational establishments. So, 80,000 in all (approximately).

We still have no idea how big or small these facilities are, so let’s assume that most of these 80,000 are 2-5 cabinets or fewer and have 50 items of IT equipment each. The average server will draw between 500 and 1,000 watts. If the average draw is 850 watts, multiplied by 24 hours that equals 20,400 watt-hours daily, or 20.4 kilowatt-hours (kWh). Multiply that by 365 days a year for 7,446 kWh per year per server.

Networking and storage equipment averages out at about 250 W per item, or 2,190 kWh per year. Let’s assume that half the IT is servers and half is networking/storage.

We have 50 items (25 servers and 25 networking/storage items), so the total IT energy consumption is going to be 240,900 kWh per year.
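The per-site arithmetic above can be reproduced with a short Python sketch. The power draws and the 25/25 split are the assumptions stated in the text; the helper name `annual_kwh` is purely illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_kwh(watts: float, count: int = 1) -> float:
    """Annual energy in kWh for `count` devices drawing `watts` continuously."""
    return watts * HOURS_PER_YEAR * count / 1000


# Assumptions from the text: 50 items per site, half servers at 850 W,
# half networking/storage at 250 W, all running around the clock.
server_kwh = annual_kwh(850, count=25)
network_storage_kwh = annual_kwh(250, count=25)
site_it_kwh = server_kwh + network_storage_kwh

print(f"One server:          {annual_kwh(850):,.0f} kWh/year")
print(f"Servers (25):        {server_kwh:,.0f} kWh/year")
print(f"Network/storage (25): {network_storage_kwh:,.0f} kWh/year")
print(f"Site IT total:       {site_it_kwh:,.0f} kWh/year")
```

Changing the assumed wattages or the item count shows how sensitive the per-site figure is to those guesses.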

Assuming these facilities are cooled badly, so that 1 W of IT needs 1 W of cooling, we get 240,900 kWh of cooling energy consumption, giving us a grand total of 481,800 kWh for just one site. Commercial electricity rates are anywhere between 8p and 15p per kWh, so, taking an average electricity cost of 12p (£0.12) per kWh, one site costs roughly £57,800 per year to run.

And then if we multiply by our 80,000 locations we get to…

The 80,000 server rooms are costing the UK anywhere between £4 billion and £7 billion per annum (depending on actual tariff) and using 38.54 TWh, which is 11.37% of the electricity generated in the UK in 2016.

If we add the CCA calculation for colocation sites, then we have a total of 41.11 TWh, representing 12.13% of electricity generated in the UK.
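The scaling from one site to the national estimate can be sketched as follows. The site figures, the 80,000 count, and the CCA total come from the text, and the tariff is taken as £0.12 (12p) per kWh. The UK generation figure of 339 TWh is an assumption back-calculated from the percentages quoted above, not a number given in the article.

```python
SITE_IT_KWH = 240_900            # per-site IT energy from the estimate above
COOLING_W_PER_IT_W = 1.0         # "cooled badly": 1 W of cooling per 1 W of IT
SITES = 80_000                   # estimated number of server rooms
TARIFF_GBP_PER_KWH = 0.12        # assumed average tariff (12p per kWh)
CCA_TWH = 2.573                  # colocation energy reported under the CCA
UK_GENERATION_2016_TWH = 339.0   # ASSUMPTION: implied by the quoted percentages

site_total_kwh = SITE_IT_KWH * (1 + COOLING_W_PER_IT_W)
server_rooms_twh = site_total_kwh * SITES / 1e9      # kWh -> TWh
national_cost_gbp = site_total_kwh * SITES * TARIFF_GBP_PER_KWH
combined_twh = server_rooms_twh + CCA_TWH


def share_of_generation(twh: float) -> float:
    """Percentage of the assumed 2016 UK generation figure."""
    return twh / UK_GENERATION_2016_TWH * 100


print(f"Per site:      {site_total_kwh:,.0f} kWh/year")
print(f"Server rooms:  {server_rooms_twh:.2f} TWh "
      f"({share_of_generation(server_rooms_twh):.2f}%)")
print(f"With CCA:      {combined_twh:.2f} TWh "
      f"({share_of_generation(combined_twh):.2f}%)")
print(f"Cost at 12p:   £{national_cost_gbp / 1e9:.2f} billion/year")
```

Because every input is a named constant, it is easy to rerun the estimate with a different tariff, site count, or cooling assumption.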

From studies undertaken over the last 5 years or so, the minimum energy savings could be somewhere in the region of 15-25%. Some organisations may be able to achieve up to 70%, but this would be a very radical approach and they would need to be willing to commit to full cultural and strategic change. From the figures above, you could reduce a server room’s energy bill by around £10,000-£25,000. This may not sound very much, but multiply that by 80,000 and you get a UK PLC saving of between £800 million and £2 billion. If consumption were to reduce by this amount, it may also mean that one or two fewer power stations need to be built, reducing energy bills even more!
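As a rough check on the scale of these savings, the sketch below multiplies the per-site saving range quoted above by the 80,000 sites, and applies the 15-25% range to the 38.54 TWh server room estimate. All inputs are the article's own figures; the variable names are illustrative.

```python
SITES = 80_000
PER_SITE_SAVING_GBP = (10_000, 25_000)   # range quoted in the text
SERVER_ROOM_TWH = 38.54                  # national server room estimate
SAVING_FRACTIONS = (0.15, 0.25)          # minimum savings range from studies

# National cost saving: per-site saving multiplied by the number of sites.
national_saving_gbp = tuple(s * SITES for s in PER_SITE_SAVING_GBP)

# National energy saving: fraction of the estimated server room consumption.
national_saving_twh = tuple(SERVER_ROOM_TWH * f for f in SAVING_FRACTIONS)

low_gbp, high_gbp = national_saving_gbp
low_twh, high_twh = national_saving_twh
print(f"Cost saving:   £{low_gbp / 1e9:.1f}bn to £{high_gbp / 1e9:.1f}bn per year")
print(f"Energy saving: {low_twh:.2f} to {high_twh:.2f} TWh per year")
```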

Firstly, the best option is to adopt the EU Code of Conduct for Data Centres (Energy Efficiency).

This details 153 best practices that cover management, IT equipment, cooling, power systems, design, and finally monitoring and measurement. The guide is updated annually and is free to download.

Secondly, monitor and measure. Get some basic data: how much energy does your IT use, and how much is used by cooling, uninterruptible power supplies, and so on?

First, measure the actual amount of energy being consumed by the IT estate, and thus its cost. For too long organisations have had zero visibility of this figure.

Second, calculate the PUE: take the total amount of energy consumed by the entire facility and divide it by the IT load.

PUE is an “improvement” metric: you calculate your baseline PUE, then recalculate once you have taken some improvement actions. Implementation of best practice may be a problem for some, so undertake training. All of the major global data centre training providers have at least one training course on energy efficiency.
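Using PUE as an improvement metric might look like the following sketch. The meter readings are hypothetical values chosen for illustration (the baseline reuses the single-site estimate from earlier in the article), and the `Reading` class is not part of any real monitoring product.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """Annual energy readings for one facility, in kWh."""
    total_facility_kwh: float
    it_kwh: float

    @property
    def pue(self) -> float:
        # PUE = total facility energy / IT energy
        return self.total_facility_kwh / self.it_kwh


# HYPOTHETICAL readings: baseline matches the badly cooled site above;
# the "after" figure assumes cooling improvements with unchanged IT load.
baseline = Reading(total_facility_kwh=481_800, it_kwh=240_900)
after = Reading(total_facility_kwh=433_620, it_kwh=240_900)

saved_kwh = baseline.total_facility_kwh - after.total_facility_kwh
saved_pct = saved_kwh / baseline.total_facility_kwh * 100

print(f"Baseline PUE: {baseline.pue:.2f}")
print(f"Improved PUE: {after.pue:.2f}")
print(f"Energy saved: {saved_kwh:,.0f} kWh/year ({saved_pct:.0f}% of total)")
```

Comparing readings over the same period length (for example, year on year) keeps seasonal cooling differences from distorting the comparison.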

For the last nine years, everything we know about data centre server energy consumption has been estimated. Indeed, the figures above are estimates but, in this case, probably nearer the actual figures than most. I am prepared to assist and organise a full UK PLC survey of all data centres/server rooms/machine rooms/PoPs/mobile phone towers/transportation signalling systems if someone is prepared to pay for it. This will be a massive task and should not be underestimated, but the information that can be garnered will be worth its weight in energy efficiency gold.

We can, I believe, reach the carbon neutrality target for data centres in the UK by 2030, but it will need considerable effort by the community and support from the government.

This article has been adapted from an article published in Data Centre Solutions magazine in November 2017. The data was reviewed during the update and is still current; however, the 3rd reporting period of the CCA is now due to be published by techUK, and we expect the overall figure to increase because some new sites have been added.

John Booth is the Managing Director of Carbon3IT Ltd, an organisation that provides data centre support services such as ISO management standards, EU Code of Conduct for Data Centres (Energy Efficiency) and server room/data centre energy efficiency consultancy. Carbon3IT Ltd also provide Green IT consultancy.

John is the Chair of the Data Centre Alliance – Energy Efficiency steering committee, the Vice Chair of the British Computer Society Green IT specialist group and represents both organisations on the British Standards Institute TCT 7/3 Telecommunications, Installation requirements, Facilities & infrastructures. He is also the Technical Director of the National Data Centre Academy.